Back To The Beat (AKA Totals)

Ok so...we're going to use this space to discuss some of our algorithms and how we've arrived at the specific implementations used in our code. Although we write in C#, we'll keep the conversation at a relatively high level and refrain from referencing particular code blocks. Since we've made no mention of how we build drum patterns in the other pages, that seems like a good first entry.

The word 'Techniques' is an important one to us - it is actually a namespace within the Aleator application that contains all of the phrase building code. You may notice when listening to Facets that there really are no driving rhythms at all. Most of the beats you will hear are either (for lack of better terms) bouncy or slower, almost reggae-influenced. This is because we simply haven't written an algorithm to generate those more driving (read: rock) rhythms.

As of this post, we have two rhythm techniques - 'Bounce' and 'Vibe'. These are both classes that derive from a Technique base class that houses some of the common properties and methods. Let's concentrate on the latter of these two.

As mentioned above, the Vibe technique was really written to generate Reggae-influenced drum patterns. If you know anything about Reggae drumming, you know that those patterns tend to fall into one of three groups: One Drops, Rockers, and Steppers. You can find all of the history associated with these riddims on the interwebs if you so desire, but what we will do here is briefly describe what differentiates these grooves from each other and how we seek to represent them in our code. For all of these, our approach to the hi-hat is to start with 8th notes and vary the pattern from there by adding a few notes, removing a few, or perhaps a combination of the two. You really have a lot of wiggle room with the hi-hat.
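To make the hi-hat idea concrete, here is a rough Python sketch (not the Aleator's actual C# code). It assumes a 16th-note grid for one 4/4 measure, and the function name `build_hi_hat` and the `max_changes` limit are made up for illustration.

```python
import random

STEPS_PER_MEASURE = 16  # assumed resolution: 16th notes in one 4/4 measure


def build_hi_hat(rng, max_changes=2):
    """Start from straight 8th notes, then remove and/or add a few hits."""
    # 8th notes land on every other 16th-note step: 0, 2, 4, ... 14.
    hits = set(range(0, STEPS_PER_MEASURE, 2))
    off_steps = [s for s in range(STEPS_PER_MEASURE) if s not in hits]

    # Drop up to max_changes of the original 8th notes (hypothetical limit).
    for step in rng.sample(sorted(hits), rng.randint(0, max_changes)):
        hits.remove(step)
    # Add up to max_changes hits on the in-between 16th-note steps.
    for step in rng.sample(off_steps, rng.randint(0, max_changes)):
        hits.add(step)
    return sorted(hits)


pattern = build_hi_hat(random.Random(7))
# Print the measure as a grid: 'x' is a hi-hat hit, '.' is a rest.
print("".join("x" if s in pattern else "." for s in range(STEPS_PER_MEASURE)))
```

Because the removals and additions are drawn independently, a single call can thin the pattern out, busy it up, or do a bit of both.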

The name One Drop comes from the fact that only one beat tends to be emphasized when playing rhythms of this type - the 3 (3rd beat in the measure if you are musically challenged and still reading this). This is usually executed with a kick, a rim shot or maybe both. You can play around with other rim shots occasionally, but the important thing is that the heavy emphasis is on the 3 and the 3 only. The Rockers beat adds emphasis on the 1 (making the 1 AND 3 important), and the Steppers beat emphasizes all four beats in the measure. Again, this is usually happening with the kick, but you can mix in rim shots at different points in the measure to put some sauce on there. Just for reference...

One Drop - Legalize It (Peter Tosh)

Rockers - Sponji Reggae (Black Uhuru)

Steppers - Exodus (Bob)
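One simple way to write down the difference between the three grooves is a lookup from groove name to emphasized beats. This is only a Python sketch of the description above; the dictionary, the function name `kick_steps` and the 16th-note grid are assumptions, not names from the Aleator.

```python
# Beats are numbered 1-4 within a 4/4 measure, as in the text above.
EMPHASIZED_BEATS = {
    "one_drop": (3,),            # emphasis on the 3 and the 3 only
    "rockers": (1, 3),           # adds emphasis on the 1
    "steppers": (1, 2, 3, 4),    # all four beats
}


def kick_steps(groove, steps_per_beat=4):
    """Convert emphasized beats to zero-based steps on a 16th-note grid."""
    return [(beat - 1) * steps_per_beat for beat in EMPHASIZED_BEATS[groove]]


for name in EMPHASIZED_BEATS:
    print(name, kick_steps(name))
# one_drop [8]
# rockers [0, 8]
# steppers [0, 4, 8, 12]
```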

It's the interpretation of these guidelines that gets tricky. If we are employing the Vibe technique, we want the resulting beat to be somewhere in the neighborhood of one of the types described above, but we don't want it to be exactly the same all the time. It also needs to be reiterated that these are only guidelines - a drummer can of course do whatever he or she wants. So we try to guess where kicks, snares and hi-hats will fall using probability.
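As a loose illustration of "guessing with probability", the Python sketch below gives each step of the measure its own chance of getting a hit: very likely on the emphasized beats, unlikely everywhere else. The function name `place_hits` and the probability values are invented for the example and are not taken from the Aleator.

```python
import random


def place_hits(emphasized_steps, rng, steps=16, p_emphasized=0.95, p_other=0.08):
    """Decide step by step whether a hit lands there (probabilities are assumed)."""
    hits = []
    for step in range(steps):
        # Emphasized steps almost always get a hit; other steps rarely do.
        p = p_emphasized if step in emphasized_steps else p_other
        if rng.random() < p:
            hits.append(step)
    return hits


rng = random.Random(42)
# Three One Drop style kick patterns: step 8 is beat 3 on a 16th-note grid.
for _ in range(3):
    print(place_hits({8}, rng))
```

Each run stays in the neighborhood of the groove, since the emphasized beat is nearly always there, while the occasional extra hit keeps two measures from coming out identical.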

The kind of rhythm that will accompany a particular phrase is determined randomly when the phrase is built. Obviously if you were playing in a live setting with other musicians you would never work this way, but we are in the business of chance. If the type of beat to be generated is a One Drop, Steppers or Rockers beat, the Aleator knows to use the Vibe class to build it. The first thing we need to do when building any rhythm phrase is determine the number of kick, snare (or rim shot), and hi-hat notes that will be played. Within the Vibe technique, we use a normal distribution random number generator to get the kick total. A continuous generator is used for snare and hi-hat totals, but that's another story.
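For a feel of what drawing the kick total from a normal distribution can look like, here is a minimal Python sketch. The mean, standard deviation, the rounding, and the clamping bounds are all assumptions chosen for the example; the post does not state the values the Vibe class uses.

```python
import random


def kick_total(rng, mean=2.0, std_dev=1.0, minimum=1, maximum=8):
    """Draw a kick count from a normal distribution (all parameters assumed)."""
    raw = rng.gauss(mean, std_dev)  # continuous sample centered on the mean
    # Round to a whole number of notes and clamp it to a usable range.
    return max(minimum, min(maximum, round(raw)))


rng = random.Random(1)
totals = [kick_total(rng) for _ in range(1000)]
# Show how often each total came up; counts cluster around the mean.
for n in sorted(set(totals)):
    print(n, totals.count(n))
```

The appeal of this shape is that totals near the mean come up most often, while unusually sparse or busy measures still turn up now and then.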

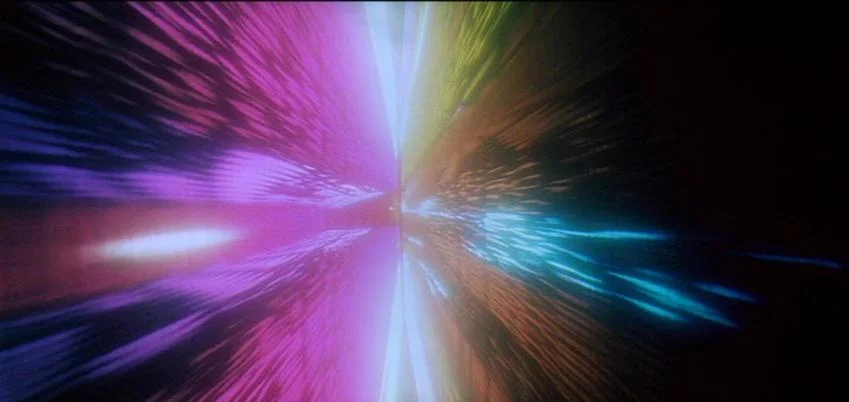

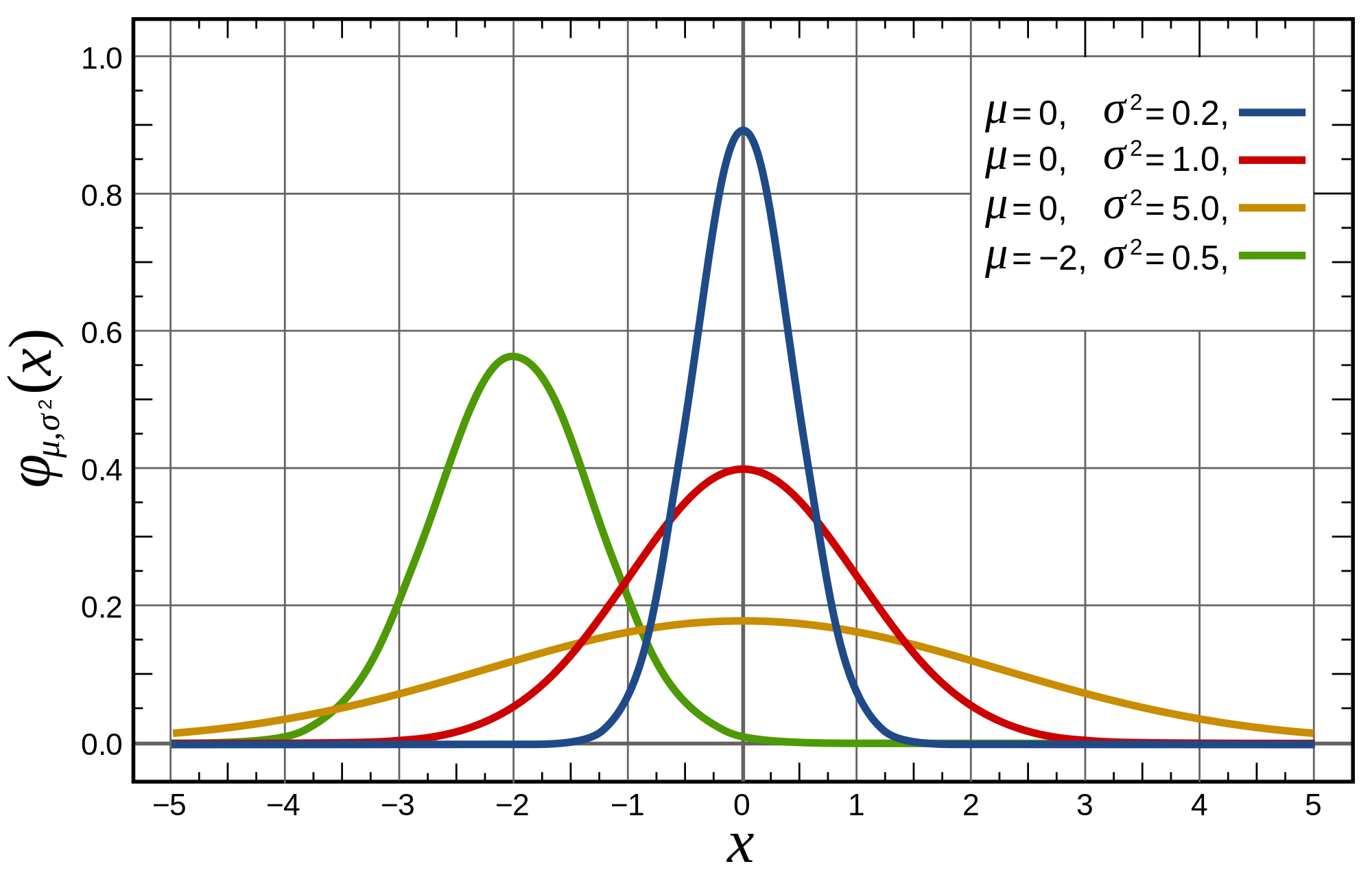

Normal distribution is just your standard bell curve: